Lightcast labor market insights offer the most extensive data on labor market trends, job openings, workforce profiles, pay and compensation, career pathways, current and future in-demand skills, demographics, and more. Following the launch of Lightcast data shares, it’s now easier than ever to dissect and analyze large datasets quickly.

What are Lightcast Data Shares?

Lightcast data is now available through direct data shares on Databricks and other leading data warehouses, marketplaces, and cloud storage platforms.

Consuming labor market insights through data shares is easy, secure, and flexible. Data is shared directly with existing data warehouses or file storage platforms, which eliminates the need for complex or time-consuming technical lifting.

Data sharing is a seamless way to deliver data. Clients now have the option to slice, modify, or prepare data before integrating it into their solutions, either internally or externally. The data is always up-to-date and secure, as it’s shared directly with each client’s existing cloud storage or warehouse as platform-native sharing, a data feed, or both.

Leveraging data shares requires limited technical resources, which reduces costs and increases efficiency. Data shares also make it simpler for customers to ingest Lightcast data into large language models (LLMs), AI solutions, and predictive analytics models. Each data share can be customized for the client, and a data share integration can be fully operational in less than 48 hours.

How Data Shares and Databricks Work Together

Databricks is one of many delivery destinations supported by Lightcast data shares. Lightcast creates a Databricks-to-Databricks Delta Share, which lets the configured Databricks workspace(s) create a read-only catalog from the share and query it within their own workspace.

What if I Don’t Use Databricks?

Lightcast supports several data share destinations beyond Databricks: Amazon S3, Snowflake, Google BigQuery, Google Cloud Storage, Microsoft Azure Blob Storage, and SFTP.

How to Connect Databricks with Lightcast Data Shares

Lightcast requires the “Databricks Metastore ID” to grant your account access to the Delta Share. A Metastore Admin (or another user with the CREATE CATALOG and USE PROVIDER privileges) in the Databricks workspace that will consume the data must accept the Delta Share to make it available within the workspace.
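If the person accepting the share is not a Metastore Admin, an admin could grant the CREATE CATALOG and USE PROVIDER privileges with Databricks SQL. The following is a minimal sketch rather than a documented Lightcast procedure: the helper `grant_statements` and the principal `analyst@example.com` are hypothetical, and it assumes the statements are run in a Databricks notebook where `spark` is predefined.

```python
from typing import List


def quote_identifier(name: str) -> str:
    """Wrap a name in backticks, doubling any embedded backtick."""
    return "`" + name.replace("`", "``") + "`"


def grant_statements(principal: str) -> List[str]:
    """Build the two metastore-level GRANT statements for one principal."""
    target = quote_identifier(principal)
    return [
        f"GRANT USE PROVIDER ON METASTORE TO {target}",
        f"GRANT CREATE CATALOG ON METASTORE TO {target}",
    ]


# Hypothetical principal; replace with a real user or group name.
statements = grant_statements("analyst@example.com")
for statement in statements:
    print(statement)

# In a Databricks notebook, each statement could then be executed with:
#   spark.sql(statement)
```

Building the statements as plain strings keeps the sketch runnable outside Databricks, so the SQL can be reviewed before anyone executes it.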

A share must be created before a destination can be configured. To create it, Lightcast requires clients to provide the following information for the target Databricks workspace:

Cloud

Region

Databricks Metastore ID
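One way to collect these three values is the Databricks SQL function `current_metastore()`, which returns a sharing identifier. The sketch below assumes the identifier has the form `<cloud>:<region>:<uuid>`; the helper `parse_sharing_identifier` and the sample value are hypothetical. Whether Lightcast expects the full identifier or only the UUID portion is not stated here, so confirm that with your Lightcast contact.

```python
from typing import NamedTuple


class SharingIdentifier(NamedTuple):
    cloud: str
    region: str
    metastore_id: str


def parse_sharing_identifier(value: str) -> SharingIdentifier:
    """Split an identifier of the assumed form <cloud>:<region>:<uuid>."""
    parts = value.strip().split(":")
    if len(parts) != 3 or not all(parts):
        raise ValueError(f"Expected <cloud>:<region>:<uuid>, got {value!r}")
    return SharingIdentifier(*parts)


# In a Databricks notebook, the raw value could be read with:
#   raw = spark.sql("SELECT current_metastore()").first()[0]
# A made-up value is used here so the sketch runs anywhere.
raw = "aws:us-west-2:00000000-0000-0000-0000-000000000000"

info = parse_sharing_identifier(raw)
print("Cloud:", info.cloud)
print("Region:", info.region)
print("Databricks Metastore ID:", info.metastore_id)
```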

Consuming a Data Share in Databricks (Native Databricks Sharing)

To access data via the Databricks UI:

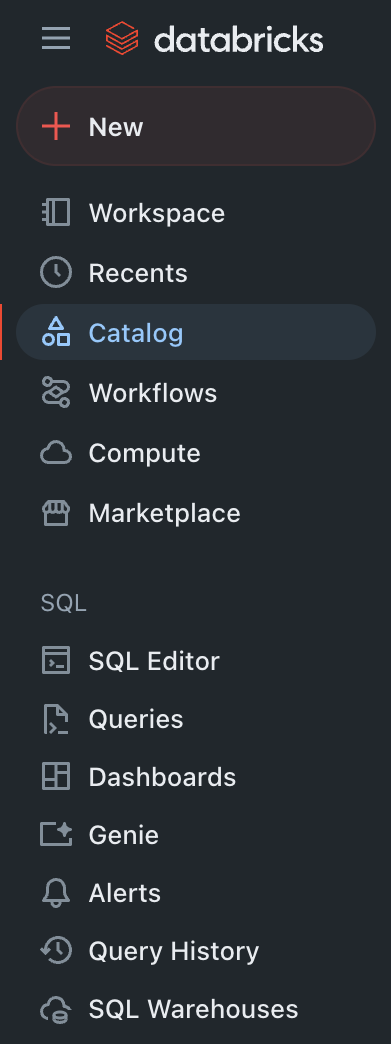

1. Navigate to Catalog

Use the sidebar on the left to locate the 'Catalog' tab.

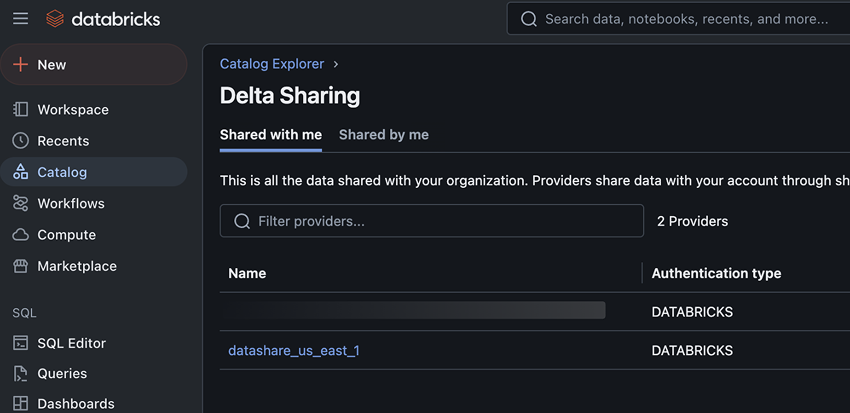

2. Select Delta Sharing

Use the filter tabs at the top of the page to locate the 'Delta Sharing' option.

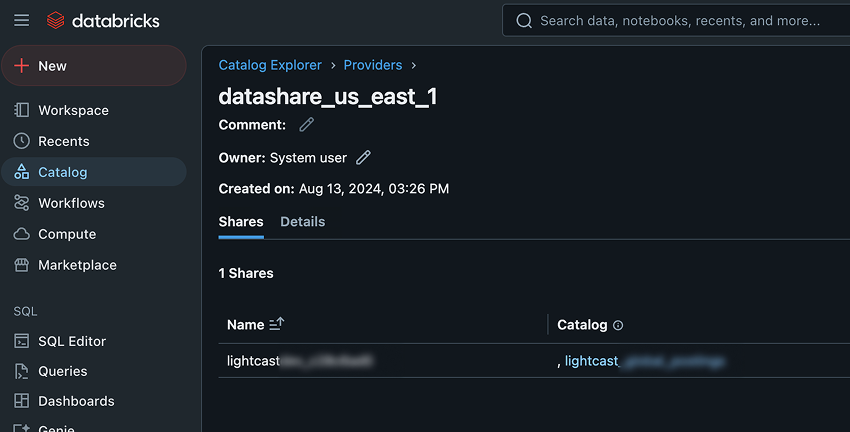

3. Select the Provider

Under the 'Shared With Me' tab, select the provider "datashare_[REGION]" from the list of available providers.

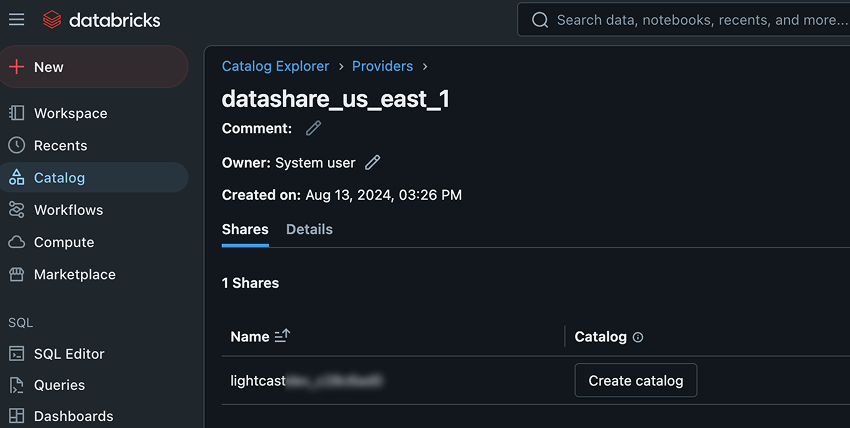

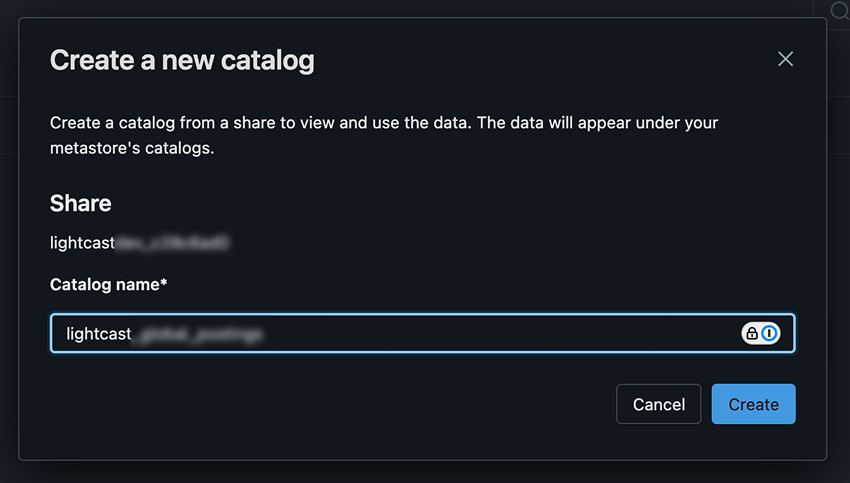

4. Create a Catalog

Under the 'Share' tab, your selected provider will be displayed with a 'Create catalog' button next to it. Click the 'Create catalog' button.

5. Name the Catalog

Give the catalog a descriptive name so that you and your team will be able to identify it later.

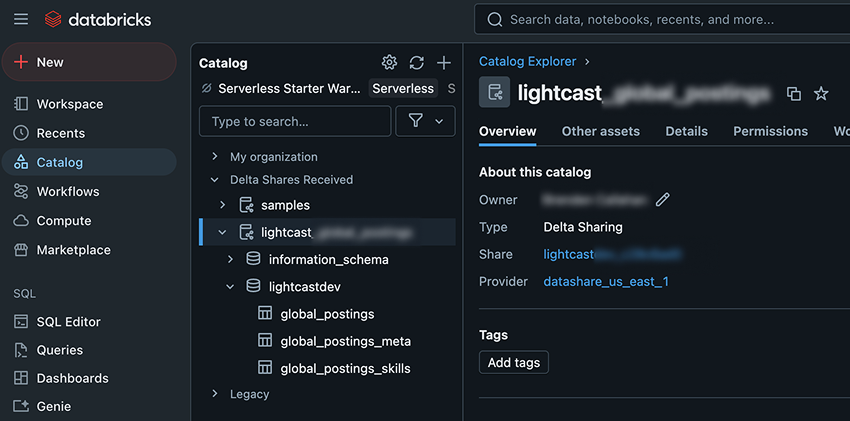

6. Find the Catalog

After you create the catalog, the data is ready to be queried. Click the catalog name to go to the list of Databricks catalogs available to you.
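The UI steps above also have a rough SQL equivalent, which can help when the setup needs to be scripted. This is a sketch under stated assumptions, not Lightcast's official procedure: the helper `share_setup_statements`, the share name `example_share`, and the catalog name `lightcast_labor_market` are hypothetical, and `datashare_REGION` stands in for the provider name shown in your workspace.

```python
from typing import List


def quote_identifier(name: str) -> str:
    """Wrap a name in backticks, doubling any embedded backtick."""
    return "`" + name.replace("`", "``") + "`"


def share_setup_statements(provider: str, share: str, catalog: str) -> List[str]:
    """Build SQL that mirrors the UI walkthrough, in the same order."""
    p = quote_identifier(provider)
    s = quote_identifier(share)
    c = quote_identifier(catalog)
    return [
        "SHOW PROVIDERS",                                         # step 3: list providers
        f"SHOW SHARES IN PROVIDER {p}",                           # step 4: list the provider's shares
        f"CREATE CATALOG IF NOT EXISTS {c} USING SHARE {p}.{s}",  # steps 4-5: create and name the catalog
        f"SHOW SCHEMAS IN {c}",                                   # step 6: browse the new catalog
    ]


# All three names below are placeholders; substitute the values from your workspace.
statements = share_setup_statements(
    provider="datashare_REGION",
    share="example_share",
    catalog="lightcast_labor_market",
)
for statement in statements:
    print(statement)

# In a Databricks notebook, each statement could then be executed with:
#   spark.sql(statement).show()
```

Because the catalog is created from a share, it is read-only: queries against it work as usual, but writes are not permitted.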

Learn more about Lightcast data shares or contact our team to discuss connecting Databricks with Lightcast data shares.